DevOps Engineer

Kanishka specializes in Google Cloud Platform (GCP) and architecting automation solutions that simplify, scale, and secure cloud workloads.

Audit logs in Google Cloud Platform (GCP) track all user and service activity, making them vital for security, compliance, and cost analysis. We wanted a way to bring all BigQuery audit logs into a single BigQuery dataset so we could easily query, monitor, and visualize activity across projects.

One of our clients needed better visibility into their BigQuery usage, which was a major cost driver. Instead of going through a tedious manual setup, we built an automated solution that collects audit logs for BigQuery across the organization and sends them to a centralized BigQuery dataset in our project.

Centralized logs in BigQuery provide a unified source across projects:

As enticing as this sounds, manually enabling GCP audit logs involves multiple steps—adjusting IAM settings, enabling APIs, creating datasets, setting up log sinks, and assigning sink permissions. So obviously, this process is slow, error-prone, and hard to scale across multiple projects or clients. But here is the full proof solution!

To streamline this, we developed a Python script that:

Before running the script, make sure to install the required Python packages:

Download the Script

You can download the full automation script from my GitHub repository: Auto-BigQuery-Audits

Configuration

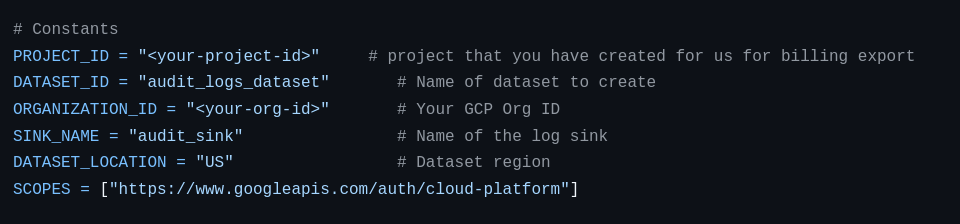

After downloading the script, open the audit_log_to_bigquery.py file from the repository and update the following variables at the top of the script with your specific project and organization details:

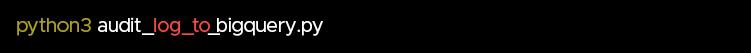

We then execute the script using:

The script:

Finally, we have manually granted the roles/bigquery.dataEditor role to this identity so that it can write logs to our BigQuery Dataset.

This setup isn't limited to BigQuery. By updating the sink’s filter, we can collect logs from other services (e.g., resource.type="gcs_bucket") or combine multiple filters for broader visibility. It’s reusable across different clients or environments. Allowing audits across various important services and their visualization.

Automating audit log collection has helped us simplify monitoring, ensure compliance, and deliver real-time insights into BigQuery usage to our real client base. But most of all, it ensured best practices are not just idealistic approaches, but realistic rituals!

For more information on GCP Optimization, implementing automated cloud governance solutions, or to explore CloudKeeper’s suite of FinOps and security tooling, please connect with our team today.

Speak with our advisors to learn how you can take control of your Cloud Cost