In modern cloud-native architectures, resiliency isn’t a bonus; it’s a baseline requirement. Just as a seemingly redundant Amazon Aurora setup can still suffer a ten-minute outage, LLM-based systems can grind to a halt if they’re not properly wired to the outside world.

This write-up explores how the Model Context Protocol (MCP), when paired with Amazon Bedrock, eliminates the disconnect between foundation models and real-world systems. We’ll walk through the architecture, the root problems MCP solves, and the technical best practices that ensure availability, reliability, and intelligent automation.

The Scenario: Intelligent AI, Isolated from Action

Large Language Models (LLMs) such as Claude, Amazon Titan, and the models behind ChatGPT are great at language reasoning, but by default they’re siloed. They can’t access APIs, query AWS, or trigger workflows unless explicitly wired to external services. Selecting the right model for your use case matters, but even the right model still needs that wiring.

Before MCP, that meant:

- Manual integrations with every external tool

- Brittle workflows with no standardized interface

- Loss of context across multi-step chains

- Higher engineering overhead and maintenance risk

In short, intelligence without execution.

Digging Into the Root Cause

The real limitation wasn’t the models—it was the lack of a standard interface between models and tools.

The core problems:

- LLMs couldn’t invoke external APIs in a reliable, structured way.

- Ad-hoc code integrations lacked fault tolerance and reusability.

- Teams duplicated effort trying to reimplement similar connectors.

The failure mode was always the same: the model knew what to do, but had no clean path to do it.

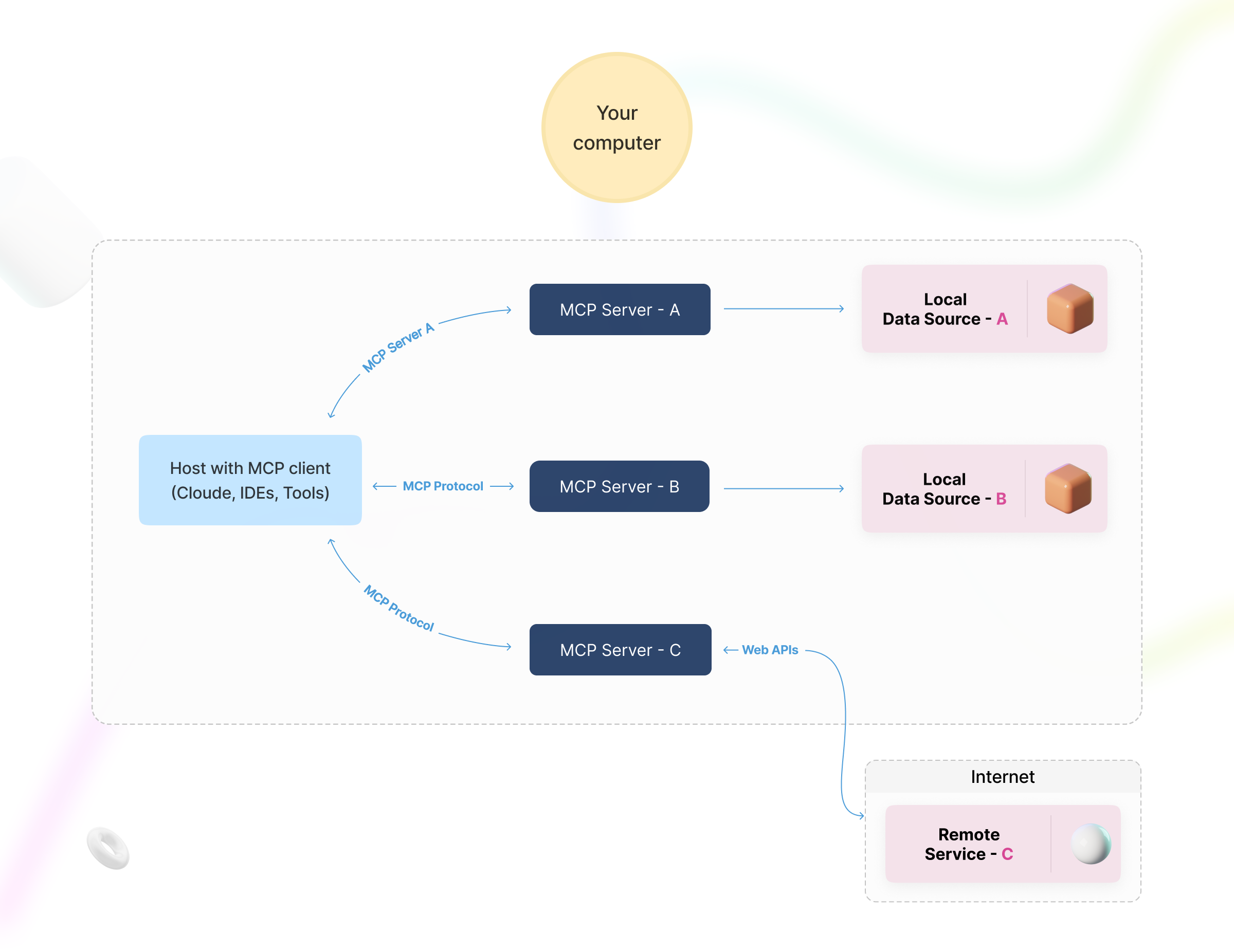

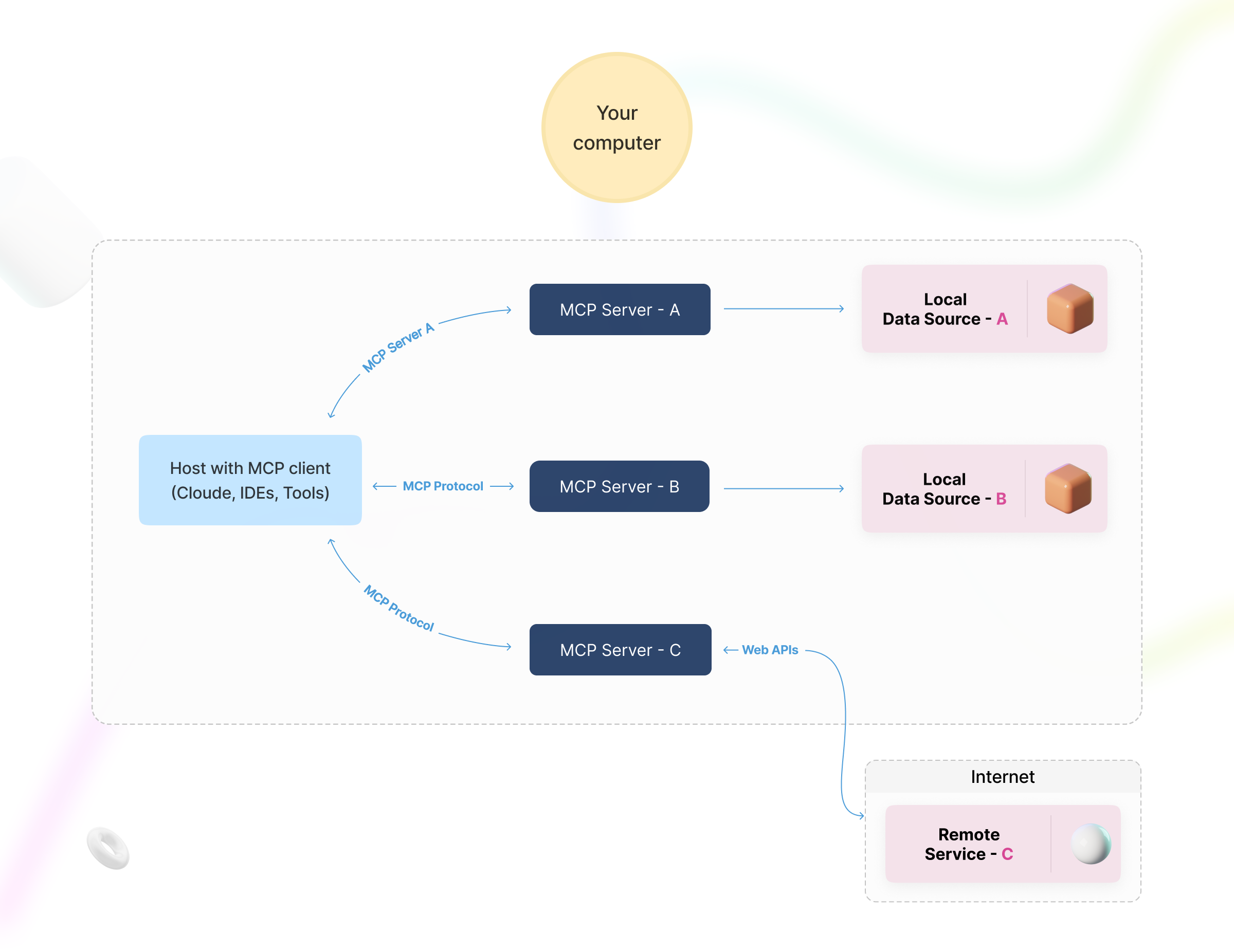

Enter MCP: A Modular, Standardized Protocol

MCP introduces a structured approach to connect LLMs to external services using a clean, modular client-server model. Think of it as a universal adapter that allows foundation models to operate in the real world.

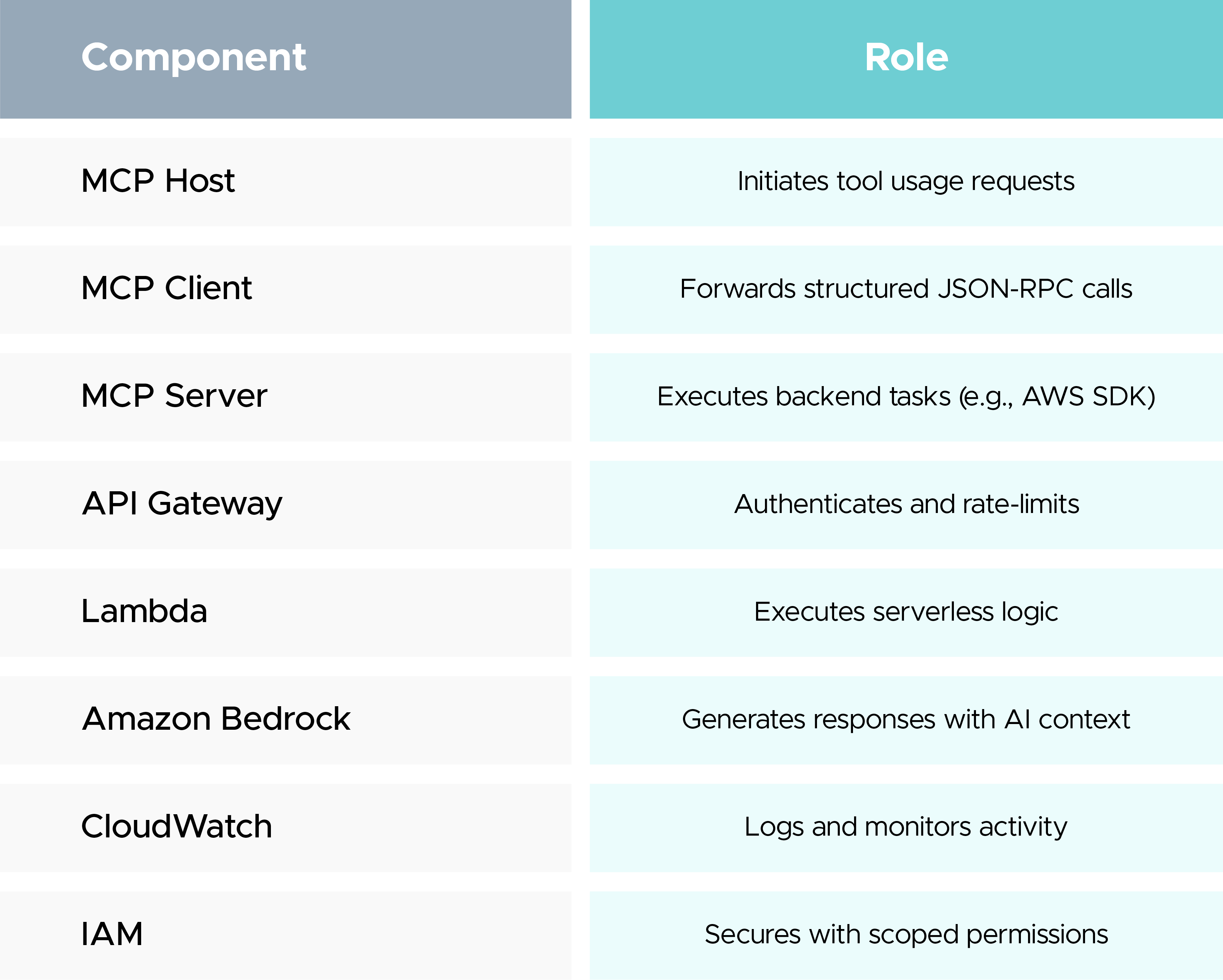

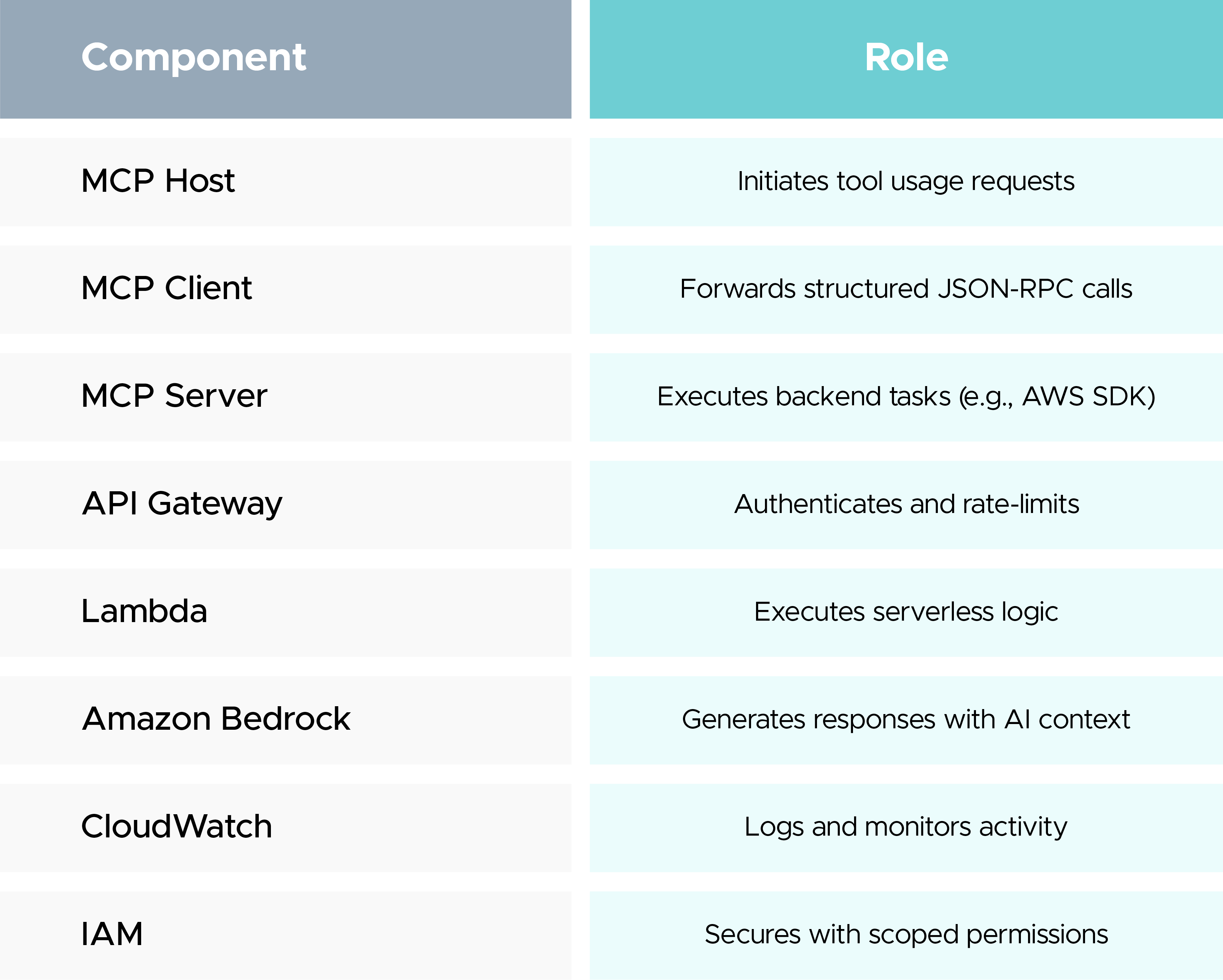

Core Components:

- MCP Host: The primary LLM-based app (e.g., a Bedrock chatbot) that initiates requests.

- MCP Client: Translates the request into JSON-RPC 2.0 format and forwards it.

- MCP Server: Receives the request and executes it by calling external APIs, querying databases, or performing computations.

- External Services: AWS APIs, internal systems, third-party APIs, or custom scripts.
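
To make the client's role concrete, here is a minimal sketch of the JSON-RPC 2.0 messages that could travel between an MCP Client and an MCP Server for one tool call. The `tools/call` method name and the `content` result shape follow the MCP specification as I understand it. The helper names and the `months` argument are hypothetical, chosen only for illustration.

```python
import json
from typing import Any


def build_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",        # fixed protocol version string
        "id": request_id,        # lets the client match the response to this request
        "method": "tools/call",  # MCP method used to invoke a tool
        "params": {"name": tool_name, "arguments": arguments},
    }


def build_tool_result(request_id: int, text: str) -> dict[str, Any]:
    """Build a matching success response (simplified to a single text item)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}]},
    }


if __name__ == "__main__":
    # "months" is a hypothetical argument, not part of any real tool schema.
    request = build_tool_call(1, "get_cost_estimate", {"months": 1})
    print(json.dumps(request, indent=2))
```

Because both sides agree on this envelope, a host can swap one server for another without changing how requests are shaped.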

Transport Mechanisms:

- stdio: Used during local testing or development.

- Streamable HTTP: Used in production environments. Supports retries, fault tolerance, and session tracking.
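
For local testing, the stdio transport boils down to newline-delimited JSON-RPC messages on standard input and output. The sketch below shows only that framing, under the assumption that each message fits on one line. It skips the initialization handshake and error handling a real server needs, and `serve_stdio` and `echo_handler` are hypothetical names.

```python
import io
import json
from typing import Callable, TextIO


def serve_stdio(reader: TextIO, writer: TextIO, handle: Callable[[dict], dict]) -> int:
    """Read one JSON-RPC message per line and write one response per line.

    Returns the number of messages handled.
    """
    handled = 0
    for line in reader:
        line = line.strip()
        if not line:
            continue  # skip blank lines between messages
        request = json.loads(line)
        response = handle(request)
        writer.write(json.dumps(response) + "\n")
        writer.flush()  # the peer is waiting on this line
        handled += 1
    return handled


def echo_handler(request: dict) -> dict:
    """Hypothetical handler: replies with the name of the method it received."""
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": {"method": request["method"]}}


if __name__ == "__main__":
    # In-memory streams stand in for sys.stdin and sys.stdout here.
    fake_stdin = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
    fake_stdout = io.StringIO()
    serve_stdio(fake_stdin, fake_stdout, echo_handler)
    print(fake_stdout.getvalue(), end="")
```

Passing the streams in as parameters keeps the loop easy to test. A local run would pass `sys.stdin` and `sys.stdout` instead.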

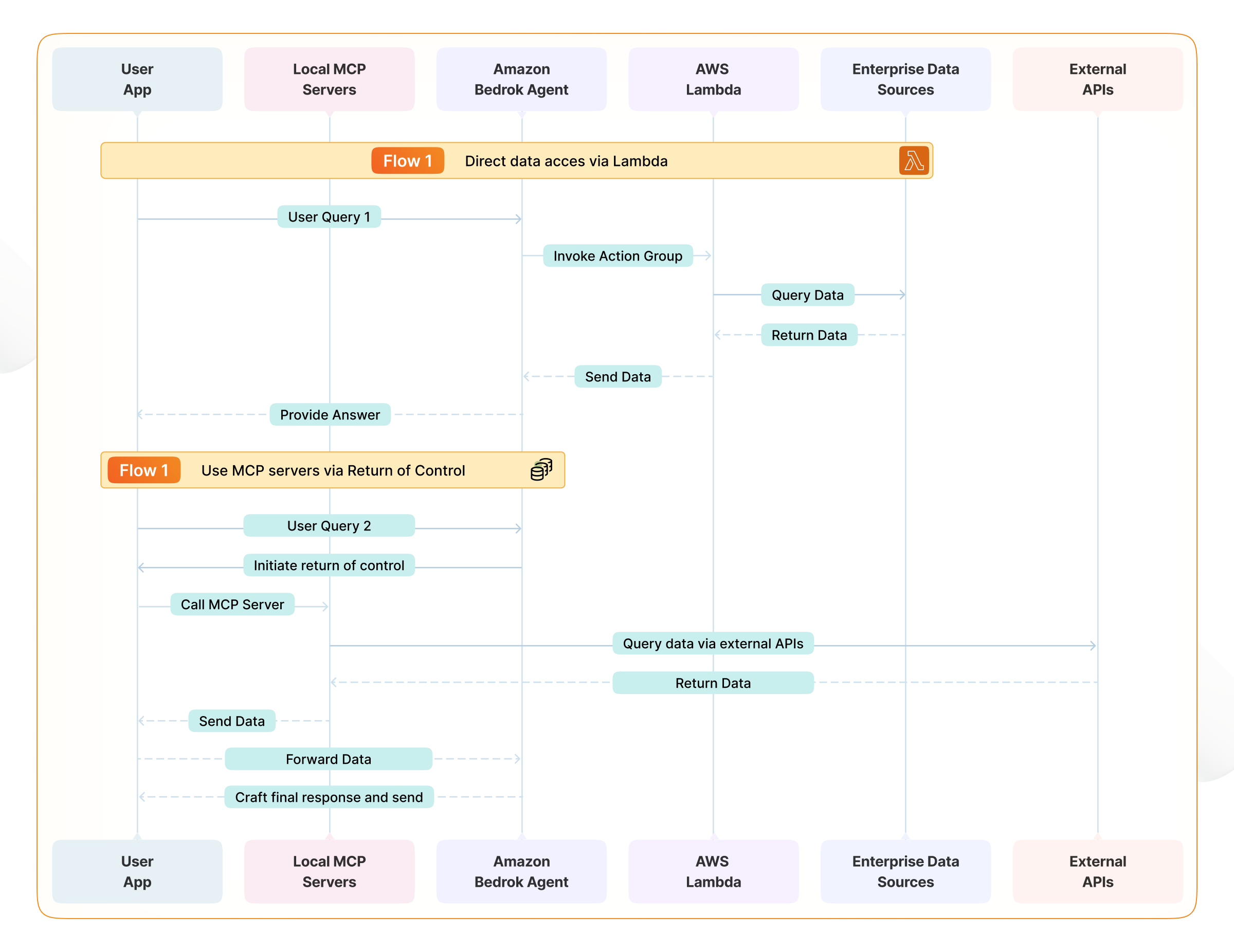

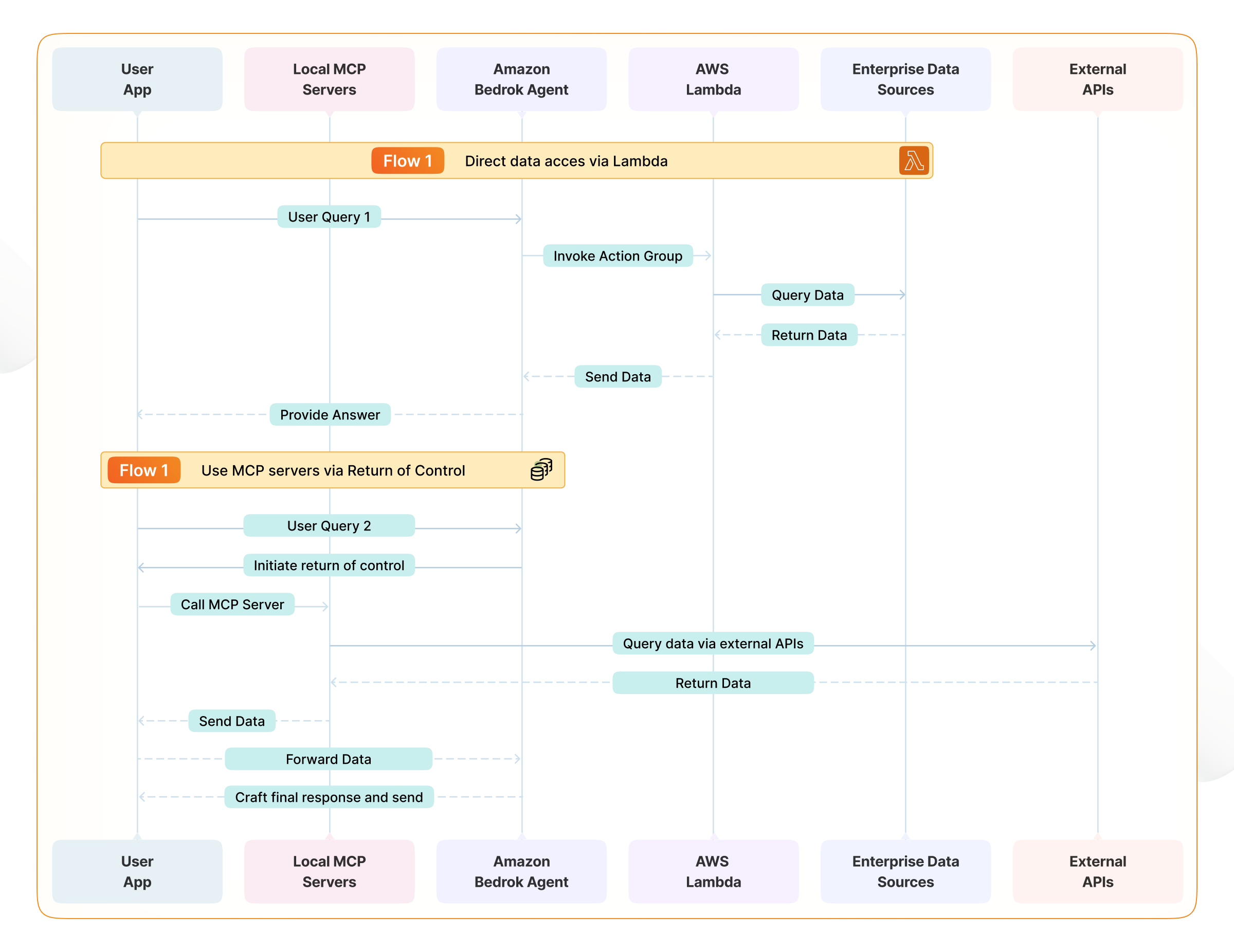

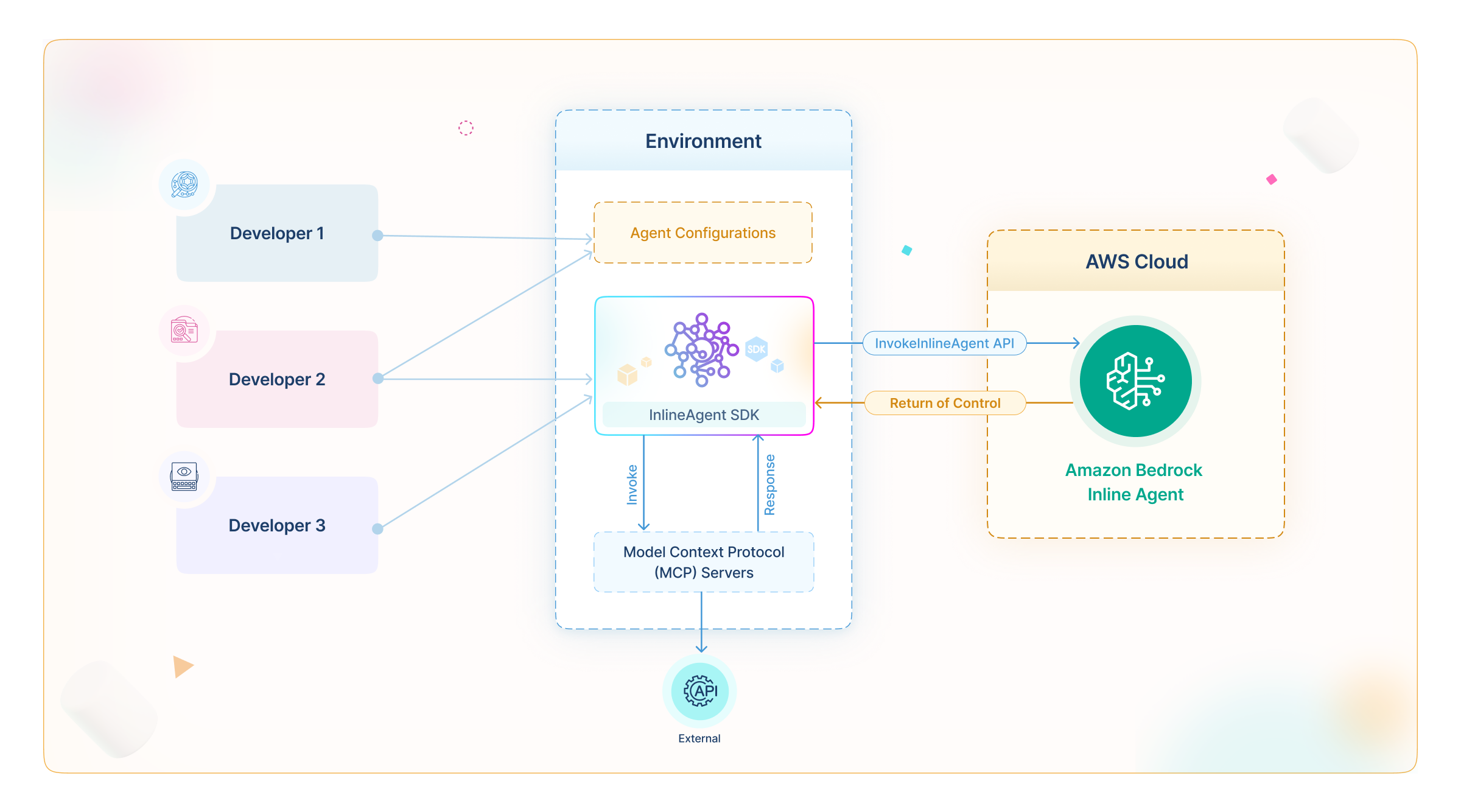

How MCP Integrates with Amazon Bedrock

Amazon Bedrock provides foundation models as managed services. With MCP, those models can now invoke live tools as part of their reasoning chain.

Example Workflow:

- A user asks: “Estimate AWS cost for my infrastructure.”

- The LLM issues a tool request: get_cost_estimate

- The MCP Client routes the call to an MCP Server.

- The MCP Server queries AWS Cost Explorer via SDK.

- The result is returned through the MCP Client back to the LLM.

- The LLM incorporates the data into its final, context-aware response.
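
The round trip above can be sketched end to end with stubs. Everything here is a stand-in: the model is replaced by two hypothetical functions, the Cost Explorer query by a function that returns a made-up figure, and the client talks to the server through a direct function call rather than a network transport.

```python
from typing import Any, Callable

ToolFn = Callable[[dict[str, Any]], dict[str, Any]]


def fake_cost_lookup(arguments: dict[str, Any]) -> dict[str, Any]:
    """Stand-in for the Cost Explorer query; the figure is made up."""
    return {"currency": "USD", "monthly_estimate": 100.0}


# Server side: tool name -> implementation.
SERVER_TOOLS: dict[str, ToolFn] = {"get_cost_estimate": fake_cost_lookup}


def mcp_server_handle(request: dict[str, Any]) -> dict[str, Any]:
    """The MCP Server looks up the requested tool and runs it."""
    params = request["params"]
    result = SERVER_TOOLS[params["name"]](params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def mcp_client_call(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """The MCP Client wraps the tool request, routes it, and unwraps the result."""
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return mcp_server_handle(request)["result"]


def stub_model_plan(question: str) -> tuple[str, dict[str, Any]]:
    """A real model would choose the tool; this stub always picks the same one."""
    return "get_cost_estimate", {"scope": "all"}


def stub_model_answer(question: str, tool_result: dict[str, Any]) -> str:
    """Fold the tool result into the final reply."""
    return f"Estimated monthly cost: {tool_result['monthly_estimate']:.2f} {tool_result['currency']}"


def run_turn(question: str) -> str:
    tool_name, arguments = stub_model_plan(question)      # LLM issues a tool request
    tool_result = mcp_client_call(tool_name, arguments)   # client -> server -> client
    return stub_model_answer(question, tool_result)       # LLM writes the final answer


if __name__ == "__main__":
    print(run_turn("Estimate AWS cost for my infrastructure."))
```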

This round trip typically completes within seconds, with no manual API calls and no hardcoded per-tool logic in the application.

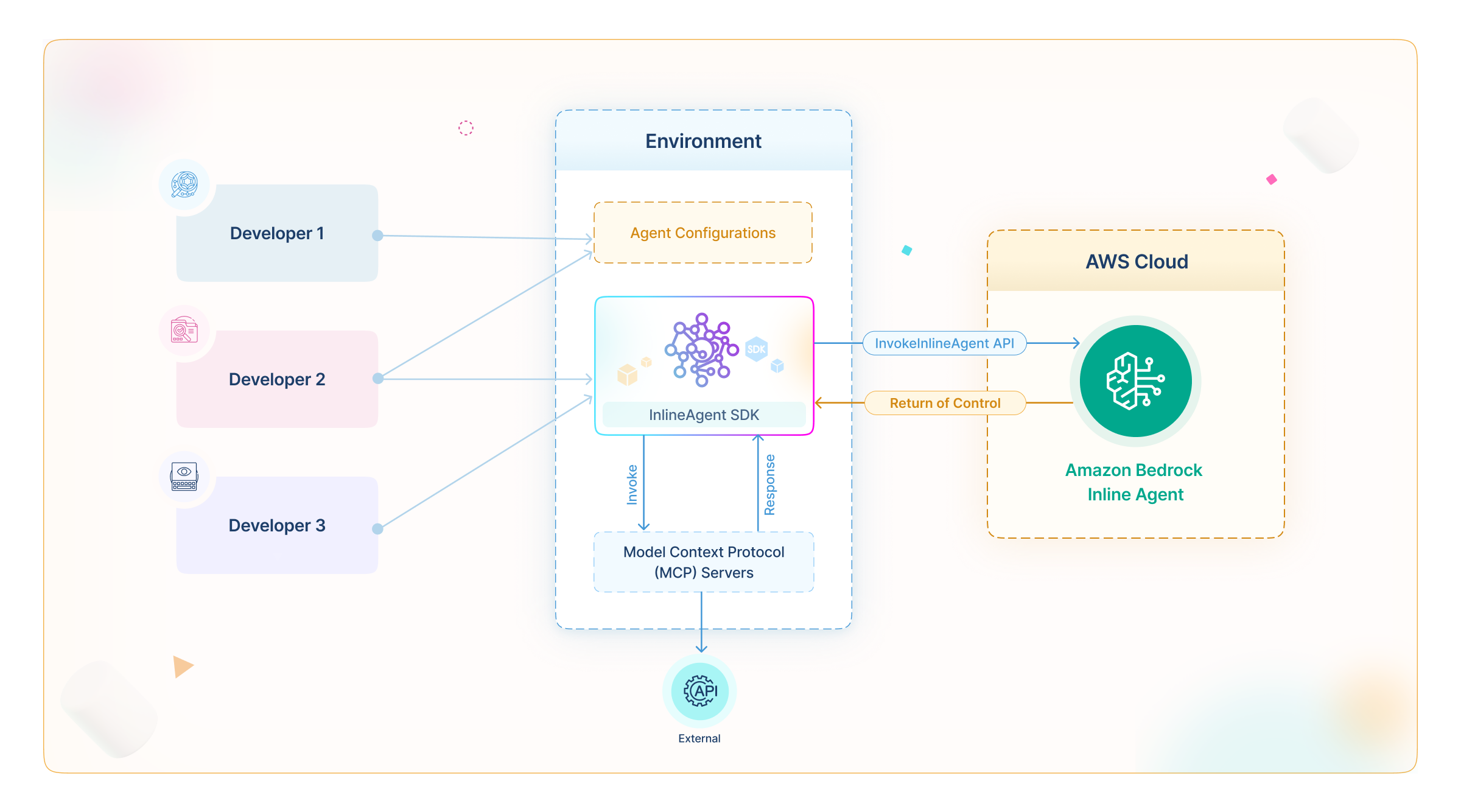

Real-World Architecture: MCP + Amazon Bedrock + AWS Lambda

To run this in production securely and at scale, AWS components are used to glue the system together.

Architecture Flow:

MCP Client → API Gateway → AWS Lambda (MCP Server) → AWS Services

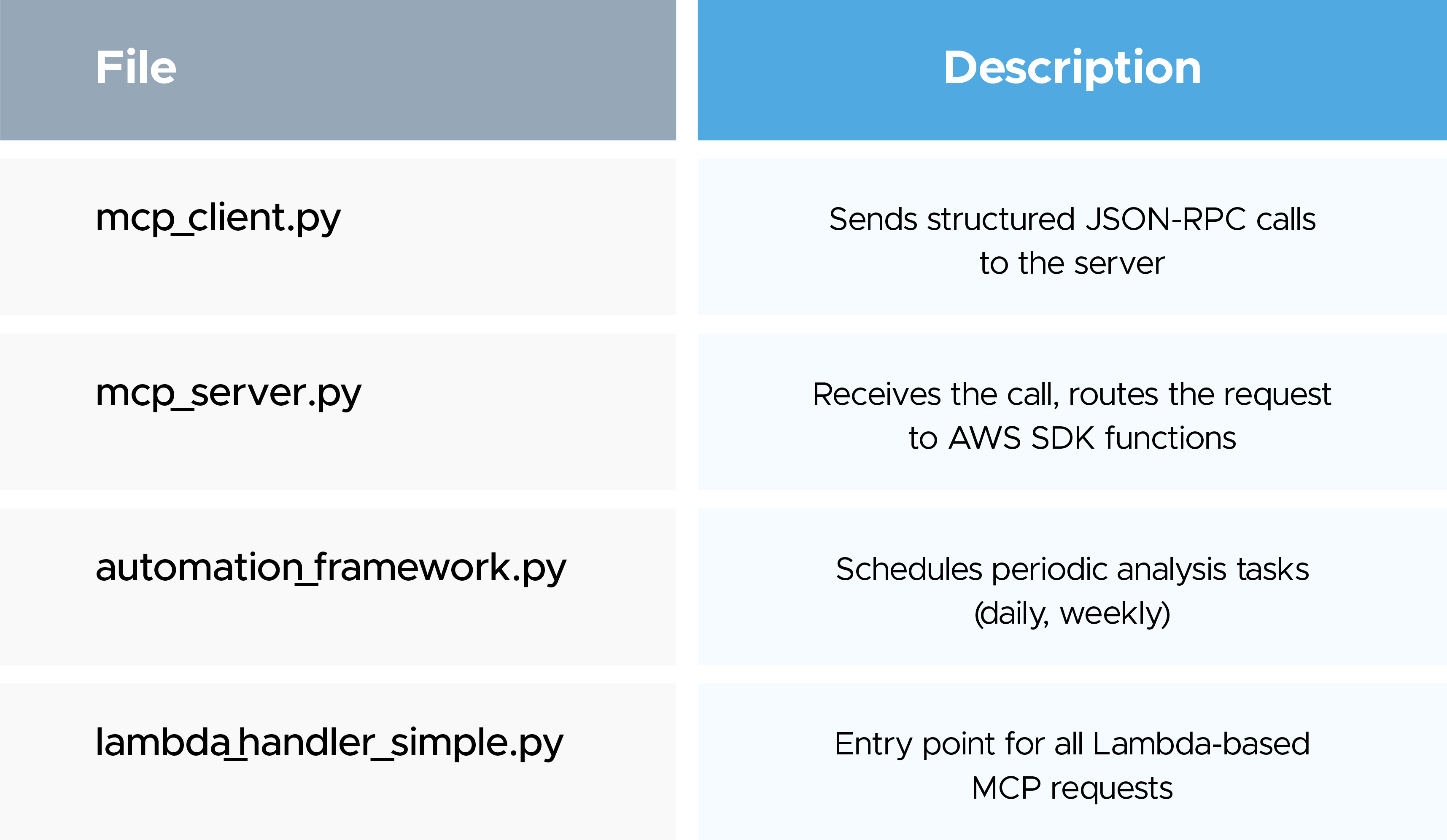

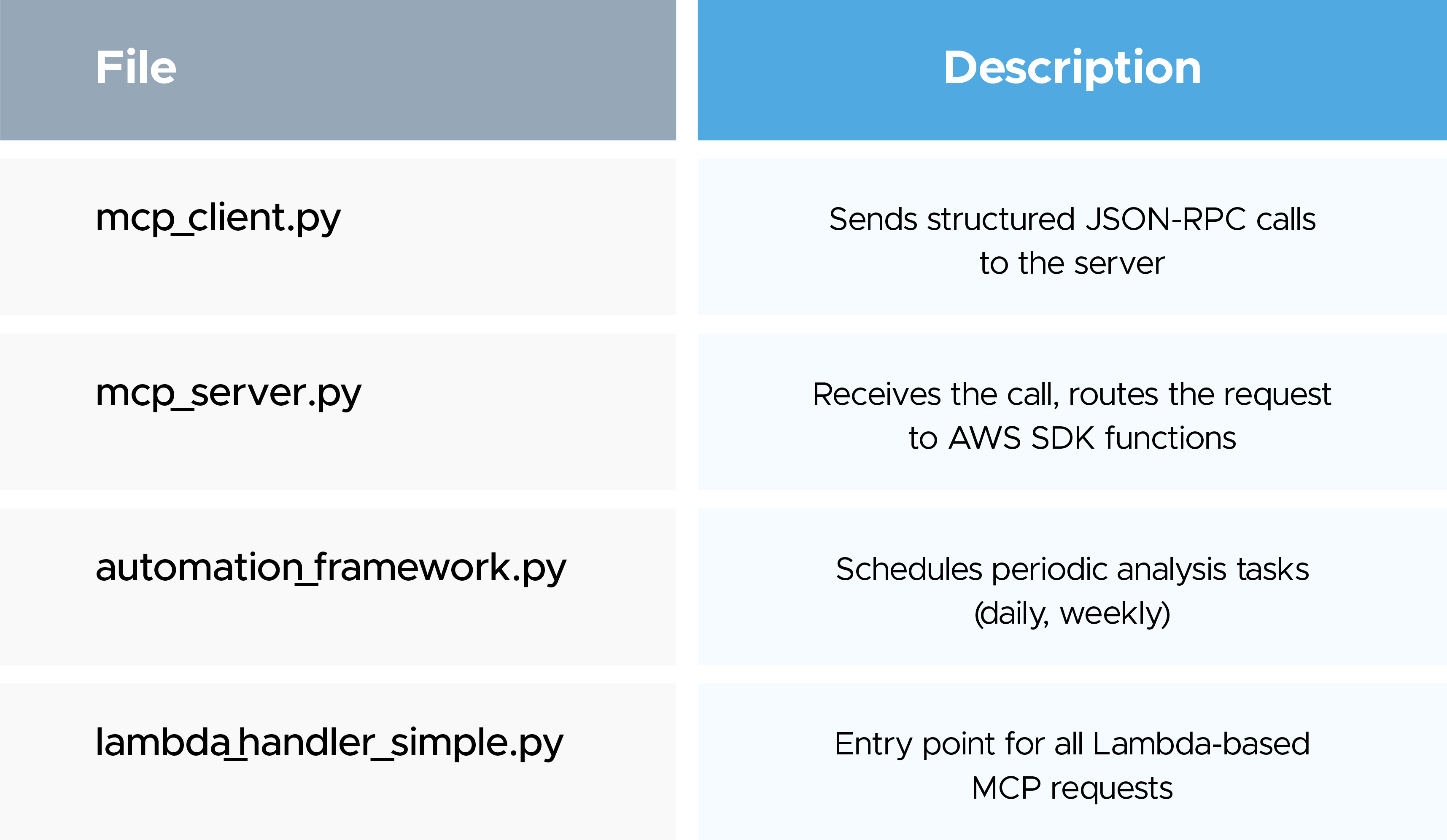

Core Code Components:

AWS Services Used:

- AWS Lambda: Stateless server execution

- API Gateway: Auth and rate-limiting

- CloudWatch: Logs and error tracking

- AWS IAM: Least privilege roles with secure secrets

- Amazon Bedrock: Foundation models for intelligent analysis
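
As a rough illustration of the Lambda piece, the sketch below shows a stateless handler behind an API Gateway proxy integration that accepts a JSON-RPC `tools/call` request and dispatches it to a tool. It is not the original implementation. The tool body is a placeholder that returns a fixed value so the code runs without AWS credentials, and the validation is deliberately minimal.

```python
import json
from typing import Any, Callable

ToolFn = Callable[[dict[str, Any]], Any]


def get_cost_estimate(arguments: dict[str, Any]) -> dict[str, Any]:
    """Placeholder tool body. A real version would query AWS Cost Explorer
    through the SDK; this one returns a fixed, made-up value."""
    return {"currency": "USD", "monthly_estimate": 0.0}


TOOLS: dict[str, ToolFn] = {"get_cost_estimate": get_cost_estimate}


def _http(status: int, payload: dict[str, Any]) -> dict[str, Any]:
    """Shape a response the way an API Gateway proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def _rpc_error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Stateless entry point: parse, dispatch, return. Nothing is kept between calls."""
    try:
        request = json.loads(event.get("body") or "")
    except json.JSONDecodeError:
        return _http(400, _rpc_error(None, -32700, "Parse error"))
    if not isinstance(request, dict):
        return _http(400, _rpc_error(None, -32600, "Invalid Request"))

    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return _http(200, _rpc_error(request_id, -32601, "Method not found"))

    params = request.get("params") or {}
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _http(200, _rpc_error(request_id, -32602, f"Unknown tool: {params.get('name')}"))

    result = tool(params.get("arguments") or {})
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": json.dumps(result)}]},
    }
    return _http(200, payload)
```

Keeping the tool registry in a plain dictionary means adding a tool is a one-line change, and the handler can be exercised locally by passing a dictionary with a `body` key.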

What We Learned: Best Practices for High Availability

Using MCP is not enough—you have to build it right. Here’s how to avoid common failure patterns:

Option 1: Harden Your MCP Configuration

1. Always Have More Than One MCP Server

Just like a single Aurora Reader causes downtime if it fails, a lone MCP server creates a single point of failure.

- Always deploy redundant MCP servers for critical tasks.

- Use fallback logic and exponential backoff in the client.

- Use smaller or idle-capable instances for redundancy if needed.
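
One way to combine redundant servers with fallback logic and exponential backoff on the client side is sketched below. The `send` callable is a hypothetical transport function supplied by the caller, and treating only `ConnectionError` as retryable is an assumption made for this example.

```python
import time
from typing import Any, Callable, Optional, Sequence


class AllServersFailed(Exception):
    """Raised when every server failed in every round."""


def call_with_failover(
    servers: Sequence[str],
    send: Callable[[str, dict[str, Any]], Any],
    request: dict[str, Any],
    max_rounds: int = 3,
    base_delay: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Try each server in order; if all fail, back off and start another round."""
    last_error: Optional[Exception] = None
    for round_index in range(max_rounds):
        for server in servers:
            try:
                return send(server, request)
            except ConnectionError as exc:
                last_error = exc  # fall through to the next server
        if round_index < max_rounds - 1:
            # Delay doubles each round: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * (2 ** round_index))
    raise AllServersFailed(f"no server answered after {max_rounds} rounds") from last_error
```

Injecting `sleep` keeps the function testable without real waiting. In production it may also be worth adding random jitter to the delay so that many clients do not retry in lockstep.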

2. Set Priority Tiers

Not all requests are equal. Don’t treat them like they are.

- Separate high-priority (e.g., AWS cost) from low-priority (e.g., news feed).

- Assign priority tiers to queues or endpoints.

- Build logic for fast retries or safe degradation.
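
Priority tiers can be as simple as a heap keyed by tier. The sketch below assumes a hypothetical tier table and retry budget. The tool names other than `get_cost_estimate` are invented for the example, and a tier with zero retries stands for "degrade instead of retrying".

```python
import heapq
import itertools
from typing import Any

# Hypothetical tier table: a lower number means more urgent.
TIER_BY_TOOL = {"get_cost_estimate": 0, "get_news_feed": 2}
DEFAULT_TIER = 1

# Hypothetical retry budget per tier; tier 2 gets no retries and degrades instead.
RETRIES_BY_TIER = {0: 3, 1: 1, 2: 0}


class TieredQueue:
    """Serve requests by tier first, then in arrival order within a tier."""

    def __init__(self) -> None:
        self._heap: list = []
        self._arrival = itertools.count()  # tie-breaker so request dicts are never compared

    def put(self, request: dict[str, Any]) -> None:
        tier = TIER_BY_TOOL.get(request["tool"], DEFAULT_TIER)
        heapq.heappush(self._heap, (tier, next(self._arrival), request))

    def get(self) -> tuple[int, dict[str, Any]]:
        tier, _, request = heapq.heappop(self._heap)
        return tier, request

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    q = TieredQueue()
    for tool in ("get_news_feed", "get_cost_estimate", "get_inventory"):
        q.put({"tool": tool})
    while q:
        tier, request = q.get()
        print(tier, request["tool"], "retries:", RETRIES_BY_TIER[tier])
```

The arrival counter matters: without it, two requests in the same tier would force Python to compare the request dictionaries, which raises a `TypeError`.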

Option 2: Remove Bottlenecks with Clustered Endpoints

Instead of relying solely on a single endpoint (an RDS Proxy in the Aurora case, an MCP endpoint here), consider clustered approaches.

- Use Bedrock’s orchestration tools (like Agents) to route requests.

- Keep servers stateless and scalable.

- Implement connection pooling at the application layer, not hardcoded in Lambdas.
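
Application-layer pooling can be sketched as a small fixed-size pool that hands out existing connections instead of opening a new one per request. The `factory` argument is a hypothetical function that creates whatever connection object the application uses, and the one-second default timeout is an arbitrary choice.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Fixed-size pool: connections are created once and reused across requests."""

    def __init__(self, factory: Callable[[], T], size: int) -> None:
        self._idle: "queue.Queue[T]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout: float = 1.0) -> Iterator[T]:
        # Raises queue.Empty if no connection frees up within the timeout.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always hand the connection back


if __name__ == "__main__":
    pool = ConnectionPool(factory=lambda: object(), size=2)
    with pool.connection() as conn:
        print("borrowed", conn)
```

Because the pool lives in application code rather than inside each handler call, the same object can be shared by every request that a process serves.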

Wrapping Up

Model Context Protocol bridges the gap between intelligent models and real-world action. But just like Aurora’s proxy failed during a Reader crash, even a powerful tool like MCP can fall short if not deployed with resiliency in mind.

Key Takeaways:

- MCP standardizes LLM-to-tool interactions.

- Amazon Bedrock enables seamless execution of complex workflows.

- Reliability depends on redundancy, failover strategies, and endpoint management.

Summary Table

What’s Next for MCP?

- Dynamic Server Discovery: Register and detect servers on the fly.

- Unified Security Model: Centralized authentication for all servers.

- Retry-Orchestration Layers: Better state management for long chains.

- Model-Driven Planning: Let the LLM decide the best toolpath dynamically.

If you’re ready to put LLMs to work in the real world, MCP is your foundation. Just don’t forget the lessons from production outages—redundancy, fallback logic, and structured protocols always win.

