Team CloudKeeper is a collective of certified cloud experts with a passion for empowering businesses to thrive in the cloud.

16

16It's 2026, and AI is no longer the novelty it was 10 years ago.

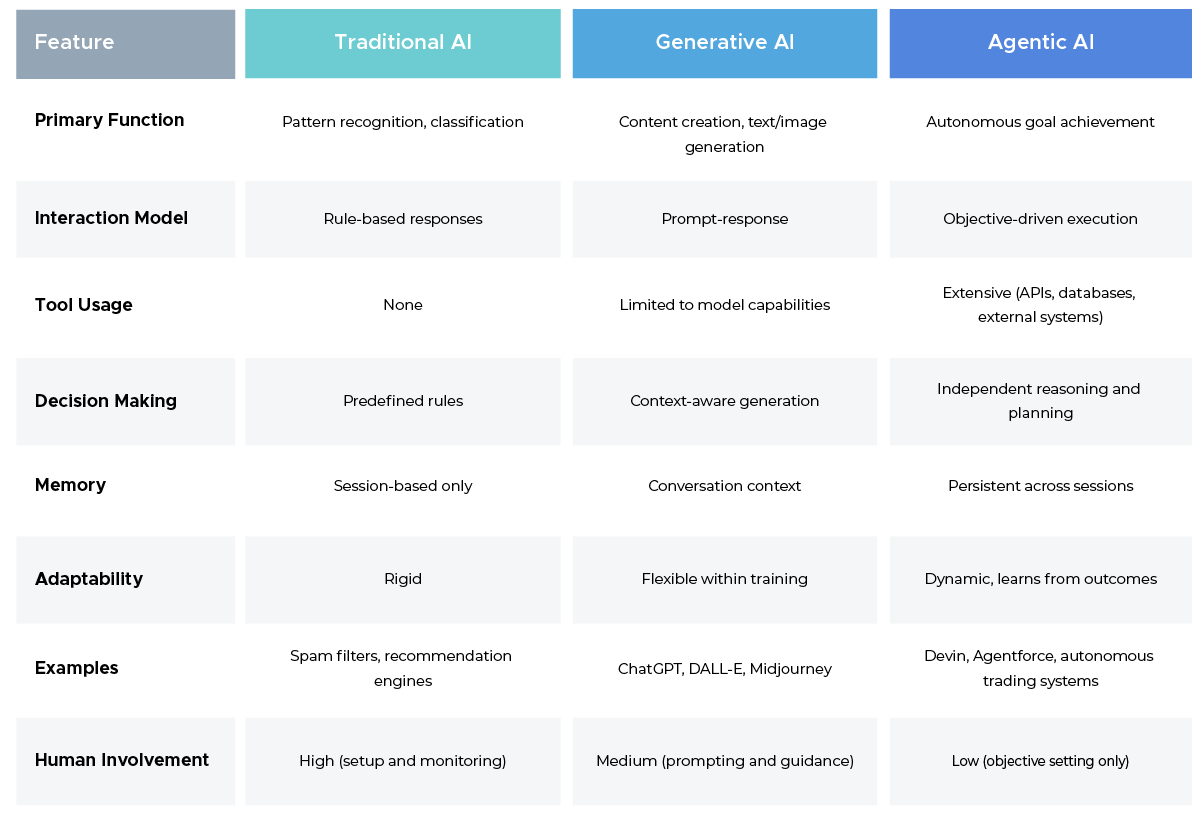

From copilots embedded in everyday software to LLM-powered search, chat, code, design, and analytics tools, artificial intelligence has been effectively democratized. Agentic AI represents the next major shift.

Unlike traditional AI systems that respond to prompts, Agentic systems can plan, decide, act, and iterate toward goals autonomously.

However, we’ve barely scratched the surface.

This blog explores Agentic AI in detail. It is a practical, end-to-end guide to Agentic AI—what it is, how it works, where it's being used today, and what it means for the future of software, automation, and cloud management platforms.

Traditional AI—also called narrow AI or weak AI—dominated from the 1950s through the early 2020s. These systems excelled at single, well-defined tasks within rigid parameters.

Some applications of traditional AI systems are:

OpenAI's GPT-3 launched in 2020 with 175 billion parameters. It could generate human-like text on virtually any topic. DALL·E created images from text descriptions. ChatGPT, released in 2022, became the fastest-growing consumer application in history.

Generative AI creates new patterns, which is the most overt highlight over traditional models. To put it into context, traditional AI models worked on pre-set patterns, but GenAI learns new ones too. Some of the use cases of Generative AI include:

But the Achilles’ heel for GenAI was that it couldn’t take action. The working of GenAI remained reactive—it would wait for you to enter a prompt, generate a response, and then the cycle continued.

The key highlight was that it improved if you gave a positive or negative response.

2025 was the “great coming” of Agentic AI.

AI agents moved from theory to production infrastructure. The definition shifted from academic concepts of systems that perceive, reason, and act to practical descriptions of large language models capable of using software tools and taking autonomous action.

The only major hiccup in “true artificial intelligence” was that it couldn’t act, and with Agentic Artificial Intelligence, that is what was solved. Agentic AI, unlike previous systems, can act on what it generates.

Some of the common use cases of Agentic AI are:

What we’re witnessing is the fourth major evolution in AI–human interaction: from rigid rule-following systems to autonomous agents that can reason, adapt, and take action across complex workflows.

It’s 2026 now, and in the years leading up to this point, there have been several key developments in Agentic AI that have shaped what it is today. These can broadly be split into two themes:

The Model Context Protocol (MCP) from Anthropic, released in late 2024, allowed developers to connect large language models to external tools in a standardized way.

DeepSeek-R1’s release in January disrupted assumptions about who could build high-performing LLMs. Chinese tech companies rapidly expanded the open-model ecosystem.

Google introduced Agent2Agent.

Agentic browsers appeared—Perplexity’s Comet, Browser Company’s Dia, and OpenAI’s GPT Atlas. These tools reframed the browser as an active participant rather than a passive interface.

Enterprises started seeing tangible results. Google Cloud’s 2025 survey, conducted across 3,466 senior leaders, confirmed that Agentic AI was no longer experimental. Companies deploying agents reported measurable productivity gains and cloud cost savings.

As has been the case with AI, today’s “star of the tech show,” Agentic AI, has developed incrementally rather than overnight.

Let's cut through the hype.

Agentic AI refers to AI systems that act autonomously to achieve defined goals. They don't just generate responses—they take action. They don't wait for constant human input—they pursue objectives independently.

These systems combine LLMs with external tools, memory, planning capabilities, and feedback loops. They can break down complex goals into manageable tasks. Execute those tasks using appropriate tools. Observe outcomes. Adjust their approach. Iterate until the goal is achieved.

Think of it this way: Generative AI is like having a smart consultant while Agentic AI is like having an employee who actually does the work.

The key difference? Agency.

Agency means autonomy with purpose.

Traditional AI and GenAI are reactive. meaning they respond to inputs. Agentic AI, however, is proactive, which means it initiates actions based on goals, not prompts.

Agency involves several components:

Agency transforms AI from an assistant into an actor. That's the revolution.

Chatbots wait for you to type a question.

Copilots suggest completions as you work.

Agents? They take over entire workflows.

Here are two of the most impacted areas, which have the market on the edge of its seat, with everyday interactions being handled by Agentic systems:

Agentic AI closes the loop that previous generations left open. It no longer hands off instructions to humans—it carries them out end to end.

The interaction model has shifted from conversation to collaboration. Instead of prompting, you’re delegating.

The evolution happened in stages.

"Press 1 for billing. Press 2 for support."

These systems followed decision trees and delivered clear ROI by deflecting simple support tickets. But throw a curveball at them, which was a question they don’t know, and you’d see the system break down immediately.

Natural language understanding improved significantly, and chatbots started handling much more complex interactions. Most common examples of conversational AI systems are Siri and Alexa.

LLMs like GPT-3 and ChatGPT generated human-quality responses. They could explain concepts, write code, and analyze documents. Conversational depth increased dramatically.

The real evolution with Agentic AI is the independence it has gained to solve problems end-to-end.

What Agentic AI is now empowered to do is:

Example: Party planning. A chatbot can suggest recipes. An agent, on the other hand, checks your calendar, emails friends to coordinate dates, orders groceries through an API, creates a Spotify playlist based on guest preferences, and sends calendar invitations. It executes the entire project.

That’s the shift from read-only to read-write. From suggesting to doing.

Why Chatbots and Copilots Were Limited

No memory beyond the conversation: Each session started fresh. Context disappeared the moment you closed the chat window.

Copilots improved on some of these. GitHub Copilot suggests code. It's useful. But it doesn't architect the system, write tests, deploy to production, and monitor for errors. You still do the heavy lifting.

Agents remove these constraints. They access tools, remember, plan, execute, and persist until goals are achieved.

Traditional AI follows a simple pattern: Input → Process → Output.

Agentic AI operates in loops: Perceive → Reason → Act → Observe → Adjust → Repeat.

Let's break that down.

This feedback loop enables agents to handle dynamic environments and complex, multi-step tasks that would defeat reactive systems.

Prompting and objective-setting might look similar on the surface, but they’re really not.

A prompt is a very specific instruction, like: “Write a product description for noise-canceling headphones.” An objective, on the other hand, is a goal: “Increase headphone sales by 20% this quarter.”

When you work with prompts, you’re telling the AI exactly what to do. You’re still thinking, planning the steps, and deciding the direction. The AI is mostly just executing what you asked for.

With objectives, the dynamic changes completely. You tell the AI what outcome you want, and it figures out how. It might research competitor pricing, analyze customer reviews, generate multiple product descriptions, run A/B tests, identify the best-performing version, and then deploy it across your marketing channels. Same outcome on paper. Completely different process underneath.

This is where planning comes in. Objectives require agents to actually think in terms of steps: breaking a big goal into smaller tasks, prioritizing them, executing them in the right order, handling dependencies, and even dealing with things when they fail or don’t go as expected.

And that’s really the core difference. Assistants need instructions, whereas Agents need objectives.

The mechanics involve several interconnected components.

First, the agent must comprehend what you're asking for.

This goes beyond parsing language. The agent needs to understand intent, context, constraints, and success criteria. "Book the cheapest flight" is different from "Book a direct flight leaving after 2 PM." The agent must extract these nuances.

LLMs handle this through their training on vast text corpora. They've learned how humans express goals, how to interpret ambiguous requests, and how to ask clarifying questions when needed.

Once an objective is clear, the agent breaks it into manageable steps.

Say your objective is "Analyze Q4 sales performance and identify improvement opportunities." The agent might decompose this into:

Task decomposition requires understanding dependencies. You can't analyze data before retrieving it. You can't generate visualizations before calculating metrics.

Agentic systems use planning algorithms—some inspired by classical AI planning, others using LLM reasoning—to create execution plans.

With a plan established, the agent executes.

This is where tool usage becomes critical. An agent needs access to databases, APIs, code execution environments, email systems, document editors, and whatever else the task requires.

The Model Context Protocol and similar standards enable this. They provide structured ways for agents to call functions, pass parameters, and receive responses.

After each action, the agent observes outcomes. Did the database query succeed? What data was returned? Are there errors? How does this affect the plan?

Observation informs the next action. If a query returned no data, the agent might try alternative date ranges or data sources. If an API call failed, it might retry with different parameters or switch to a backup service.

Agents don't follow rigid scripts.

They adapt based on what happens. If the initial plan isn't working, they revise it. If they discover new information midway through, they incorporate it. If they hit a dead end, they backtrack and try alternatives.

This iterative process is what makes agents robust. Static workflows break when encountering unexpected conditions. Agents adjust.

Reinforcement learning principles often guide this adaptation. Actions that move toward the goal are reinforced. Actions that don't are deprioritized.

Some advanced agents even learn from deployment. They analyze which strategies worked in past situations and apply those patterns to new problems. This on-the-job learning allows agents to improve over time without explicit retraining.

Theory is one thing. Practice is another. Here's where agentic AI is actually being deployed in 2026.

Software Development

AI coding agents like Devin from Cognition Labs and enhanced versions of GitHub Copilot now write, test, and deploy code autonomously. The agents handle boilerplate, while humans focus on system design and complex logic.

Customer Service

Companies like Salesforce deployed Agentforce in 2025, handling customer inquiries from end to end. The system researches issues, implements fixes, updates records, and follows up—without human intervention except in edge cases.

Financial Services

Credit analysis agents evaluate loan applications by pulling data from dozens of sources, applying complex scoring models, and generating approval recommendations—all in minutes rather than days.

Healthcare

Diagnostic support agents analyze patient histories, lab results, imaging studies, and medical literature to suggest differential diagnoses and recommend tests. They don't replace physicians but augment clinical decision-making.

Cloud Operations

This is where things get particularly interesting for cloud FinOps and cloud cost optimization.

Infrastructure agents monitor cloud resources continuously. They detect idle instances, identify oversized resources, recommend reserved instance purchases, and automatically implement optimizations during maintenance windows.

Massive productivity gains : Agents handle routine work that consumes human hours. One supply chain company reported that agentic optimization saved $47,000 monthly by automatically adjusting logistics in response to real-time conditions.

24/7 operation. Agents don't sleep. They monitor, analyze, and act around the clock. Problems get addressed immediately, not during business hours.

Security risks: Chinese hackers used Anthropic's Claude AI tool to break into 30 companies and government agencies last year. Agents with broad system access become attractive attack vectors.

Prompt injection attacks can manipulate agents into unauthorized actions. An agent with database access could be tricked into deleting records or exfiltrating data.

The key is thoughtful deployment. Here’s how you should proceed :

Agentic AI represents a shift from reactive cost control to autonomous cloud cost optimization. Instead of just flagging issues, these systems can analyze, decide, and act - optimizing spend, performance, and governance across the cloud.

Where does this go from here?

The organizations moving now are building the muscle and governance frameworks while there's time to learn. Those waiting will spend years catching up.

Everything we've discussed—the planning, the tool usage, the autonomous execution—applies directly to cloud cost management.

CloudKeeper recognized this opportunity early. Instead of making you navigate dashboards, export CSVs, and manually analyze spending patterns, we built LensGPT: an agentic AI FinOps consultant. LensGPT combines the power of multiple AI tools.

It's conversational cloud FinOps. No SQL queries. No complex filters. No dashboard archaeology. Just ask questions in natural language and get complete answers. Just like you chat with a colleague and get answers the way you would from a seasoned FinOps consultant.

The platform goes beyond simple cost reporting:

Learn more at cloudkeeper.com/cloudkeeper-lensgpt

Agentic AI is going to be the next big thing, potentially even eclipsing the dot-com revolution of the 90s in terms of its unprecedented boost in productivity, reach, and accessibility.

But unlike the fearmongering that goes around—that Agentic AI will replace humans in terms of their dexterity, creativity, and uniqueness—that narrative is largely a marketing gimmick pushed by those who have billions invested in it. In reality, Agentic AI is a tool that augments human effort and makes individuals far more productive, not obsolete.

For organizations, the coming decade will belong to those that master human–agent collaboration to boost productivity, ship products faster and with fewer bugs, using AI to trim cloud costs and deliver better customer experiences.

Speak with our advisors to learn how you can take control of your Cloud Cost

Overall, Retro Bowl is a well-crafted football game that proves you don’t need flashy graphics to deliver an exciting and rewarding sports experience. https://retrobowlplus.com